AI x Wellbeing Brief

How AI is reshaping beauty, your brain, and your next doctor’s visit

Plus: AI exoskeletons help people walk again, the latest on AI's energy impact, and designing god-like experiences

👋 Hi! Dana here. Welcome back to AI x Wellbeing Brief, my free newsletter exploring how AI is influencing our mental, physical, social, and spiritual wellbeing.

Even though I launched this newsletter just last month, I had to take a bit of a hiatus. No, wait: I chose to. I very consciously decided to set a lot of things down over the past few weeks, and honestly, it was hard for me.

Yup, even after 10+ years in the employee wellbeing space, and as a mindfulness and yoga teacher, I still struggle with not-doing and with holding boundaries. Even though my dad underwent major surgery, I joined a new team at work, and I had my own health scare (I’m fine!), there’s still a part of me that believes: There’s no time to slow down! You have to prove yourself! You better work, b*tch!

Thank goodness for the hard-earned wisdom that comes with age, practice, and experience. This time, I was (mostly) able to practice fierce compassion, say no often, listen to my body, and focus on simply meeting the unfolding of life. May we all do so in a culture that often seems to forget what matters most.

In today’s edition:

How AI is changing our perception of beauty and reality

Why AI may be listening in on your next doctor’s appointment

An AI exoskeleton gives wheelchair users the freedom to walk again

The latest math on AI’s energy footprint

Researchers built an AI that people treated like a god

Brain scans show excessive LLM use can lead to cognitive decline

BEAUTY

How AI is rewriting beauty and reality

Source: Canva AI

TL;DR: AI-generated beauty isn’t just reshaping our expectations; it’s reshaping our beliefs. Not only do people feel like their real faces aren’t good enough, but a new study reveals that beauty biases can distort our ability to tell what’s real. These two stories demonstrate how AI can alter our perception of reality, not just visually but psychologically.

Attractive faces are less likely to be judged as artificially generated:

A recent study reveals that our beliefs about what's real can be easily shaped by emotion, expectation, and perceptions of beauty. In the study, 145 participants were shown over 100 real faces but were told that some of the images were AI-generated. That expectation alone was enough to skew their judgment: nearly half of the faces were judged to be fake. Notably, faces rated as attractive were more likely to be seen as real, especially by men. For women, both very attractive and very unattractive faces were more likely to be judged real (a U-shaped effect).

The quiet ways AI and cosmetic ‘tweakments’ are rewiring our brains:

AI-edited faces are no longer just fun filters; they’re becoming new internalized blueprints for beauty, reshaping how people define their “natural” appearance. Experts call it “mirror anxiety”: your real face no longer matches the face you’ve gotten used to seeing on your screen. This can lead to chronic self-surveillance, emotional unease, and cosmetic obsession. Instead of photos of celebrities, people now bring AI-edited versions of themselves to plastic surgeons. Of course, AI doesn’t understand anatomy and often depicts features that aren’t even physically possible.

To ponder: Most people I know won’t post an unfiltered pic of themselves anymore. Brands are embracing perfect, forever-young AI fashion models. And have you noticed how AI-generated news anchors (whether used by professional outlets or in viral fake videos) all look the same?

This isn’t surprising, given how deeply our societal biases are baked into AI models. In fact, when I first tried to generate the image above, my prompt for “beautiful people of all ages” returned 95% young, white faces. Even after adding prompts for racial, age, and hair diversity, it was still tough to get more than two races represented in a single image. What you see above isn’t what I hoped for…honestly, I just got too frustrated to keep going! So it’s worth asking: Whose definition of beauty is AI learning and amplifying, and what is it doing to our sense of ourselves and the world?

An AI exoskeleton gives wheelchair users the freedom to walk again

Caroline Laubach walking in the Prototype Personal Exoskeleton at Nvidia GTC 2025. Source: Wandercraft

TL;DR: AI-powered exoskeletons are giving wheelchair users the ability to walk again and reclaim independence, eye-level connection, and mobility. Developed by Wandercraft, the device adapts to its user in real time and is now entering clinical trials in the U.S.

To know:

Wandercraft’s exoskeletons use AI to balance on their own, so users don’t need crutches or walkers and can move naturally and confidently across a variety of surfaces, including concrete, tile, and carpet.

The AI mimics human gait and adapts in real time to the user’s movements and the environment, enabling a highly personalized walking experience that includes moving backward and sideways as well as complex motions like dancing or bending.

Test pilot Caroline Laubach, a spinal stroke survivor and full-time wheelchair user, says the exoskeleton makes her feel “more visible and able to connect,” highlighting the emotional and social benefits of upright mobility.

Over 100 clinics already use Wandercraft’s rehab exoskeleton, and the company is working toward FDA approval and Medicare coverage for everyday use.

To ponder: I dare you to watch this TikTok of Caroline Laubach in her exoskeleton and not smile…or cry. If you or someone you know is 18+ with a spinal cord injury at or above the T6 vertebra, Wandercraft is recruiting for clinical trials. You’ll need a physically able companion, but if you don’t have one, they’re building a volunteer network to help. Contact Wandercraft to learn more or get involved.

Brain scans show excessive LLM use can lead to cognitive decline

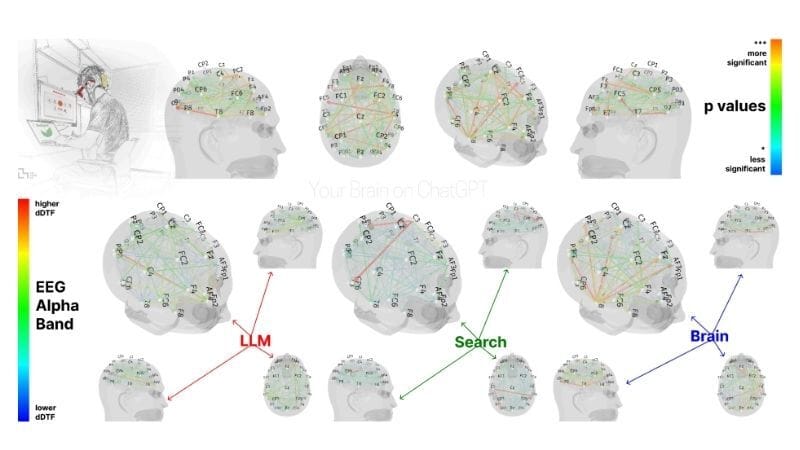

Source: MIT Media Lab

TL;DR: A new study found that writing essays with the help of ChatGPT can weaken neural activity, memory, and originality, compared to writing unaided or using traditional search engines for research.

To know:

MIT researchers tracked the brain activity of 54 students over four months as they wrote essays using different tools: ChatGPT, Google Search, or no tech at all.

Students who relied on ChatGPT showed a 47% reduction in brain connectivity and significantly lower activation in the prefrontal cortex, a region key to decision-making and critical thinking.

Over 80% of ChatGPT users couldn’t recall a single sentence from work they had just completed, compared to only 11% of students who didn’t use any tech.

Over time, researchers observed signs of “cognitive offloading,” i.e., mentally checking out when AI handled too much of the effort. And when these students later tried to write without AI, their brain patterns resembled those of novices, not experienced writers.

The AI-assisted essays were rated as flat and homogenized, described by researchers as “soulless,” “empty,” “standard and typical,” and “lacking individuality.”

To ponder: Everyone’s trying to be more “productive” and “save time”… but at what cost? Researchers have coined a term for the tradeoff: cognitive debt, when we borrow against future brainpower for short-term convenience. Notably, the study suggests that starting with your own ideas before layering on AI support can help keep neural circuits firing.

Personally, I’ve noticed a real shift in the past six months with employers finally starting to take digital wellbeing seriously. There’s also growing momentum around the concept of the “brain economy,” the idea that our collective brain health, resilience, and creativity are national assets. From early childhood development to workplace education, understanding cognitive health and how to use AI thoughtfully will be essential to building an adaptable, innovative, and future-ready population.

Why AI may be listening in on your next doctor’s appointment

Source: Pavel Danilyuk

TL;DR: A growing number of doctors are using AI listening and transcription services to capture doctor-patient conversations, auto-generate clinical notes, and draft after-visit instructions. While these tools can reduce physician burnout, ambient AI listening also raises serious ethical and privacy concerns.

To know:

AI scribes like Microsoft’s DAX and Abridge capture doctor-patient conversations in real time and auto-generate clinical notes, summaries, and billing codes.

Some systems have cut daily physician documentation time from 90 minutes to under 30, easing fatigue and burnout in an industry known for overwork.

Patients report that their doctors are more personable and attentive when they aren’t glued to a screen. Patients also don’t have to worry about taking notes and can trust they’ll get a clear, accurate summary.

Hospitals including Stanford, Mass General, and Ardent are scaling fast. One Oklahoma clinic logged 40,000 AI-assisted visits in 13 weeks.

AI-generated notes still require physician review, and concerns about accuracy and privacy highlight the need for strong safeguards and clear patient consent.

To ponder: AI scribes are red hot right now, and over $900M has been invested in just seven companies over the last two years. Although they’re often viewed as mere billing tools, Nikhil Krishnan argues that their real value might lie in capturing raw conversations to create a kind of “shared brain” across providers. Today, much of clinical documentation revolves around what’s relevant for claims or billing, so important clinical insights can be lost. For example, if 100 patients mention migraines after starting a new drug, ambient tools could flag the trend well before traditional research catches up. But as AI scribes begin recording our most sensitive moments (even therapists are using them now), we face urgent questions about privacy, consent, and how our data is used.

The latest math on AI’s energy footprint

Source: Google DeepMind

TL;DR: A new analysis by MIT Technology Review provides a comprehensive look at how much energy the AI industry uses, tracing where its carbon footprint stands now and where it’s headed as AI barrels towards billions of daily users.

To know:

Each AI interaction has a carbon cost. Generating a single image can consume as much energy as running a microwave for 5–10 seconds, while generating a 5-second video can use as much as running it for over an hour.

Inference (answering your queries) is now estimated to account for 80–90% of the computing power used for AI, and that usage is growing fast. In the future, AI use is expected to be constant and ambient, running 24/7 in the background.

Data centers already account for 4.4% of U.S. electricity use, a share that could nearly triple, to as much as 12%, by 2028. By then, AI alone could consume as much electricity each year as 22% of U.S. households.

Most AI data centers run on fossil fuels. Because they often draw on grids heavy in natural gas and coal, the electricity they consume is 48% more carbon-intensive than the national average.

To ponder: This report is just part of MIT Technology Review’s recent multimedia package “Power Hungry: AI and our energy future.” Based on six months of reporting, it’s a great look into where all this power will come from, how much more AI will need, and who will pay for it. But don’t panic! There are some reasons for optimism. Energy use and emissions from AI could drop dramatically thanks to smarter training techniques, breakthrough chips, more efficient cooling systems, and the strongest motivator of all: profit. AI is quickly becoming a commodity, and if nothing else, companies will be looking to cut energy use for the sake of their bottom line.

Researchers built an AI that people treated like a god

Source: ShamAIn

TL;DR: Korean researchers created ShamAIn, a ritual-style AI inspired by shamanism, and participants treated it like a divine presence. They knelt, spoke formally, and shared deep personal problems. The study shows how designing AI to feel “superior” or “god-like” can profoundly shape human behavior, trust, and emotional reliance.

To know:

Researchers studied Korean Mudang (shamans) and built ShamAIn around four key design principles: create an otherworldly atmosphere, evoke awe and fear, convey an invisible presence, and appear to “know” something personal.

They set up a multisensory booth, powered by GPT-4o, that used incense, ritual music, dim lighting, and spiritually styled prompts to simulate a sacred encounter.

Participants treated ShamAIn as a superior mystical entity—kneeling, using formal speech, and confiding in it.

Many felt comforted, guided, and filled with deep awe and reverence. Some took its advice more seriously than that of humans.

To ponder: Even without elaborate setups, people are already projecting mystical and spiritual qualities onto general-purpose chatbots, although AI doesn’t actually “know” anything; it simply predicts patterns. As AI-powered spiritual tools flood the market (yes, even Deepak Chopra now offers a “Digital Deepak” to help you “unlock your infinite potential,” which is basically a janky ChatGPT wrapper), it’s worth asking: Will these tools genuinely help people deepen their self-connection, or manipulate them into dependency for profit?

What stayed with me most from the study actually wasn’t the tech. It was the reminder that so many people are simply looking for a safe space to share their worries, feel heard, and reflect. Maybe what we need most isn’t smarter machines, but more investment in connection, community, and contemplation.

FIELD NOTES

More AI x Wellbeing stories

🧠 Watch out for fake AI therapists: “Psychologist” and “therapist” AI chatbots from startups like Chai and Character.AI regularly claim to be licensed mental health professionals. The bots will invent credentials, cite fake degrees from top universities, and even steal real therapists’ license numbers.

🎗️ Predicting breast cancer risk: The FDA has greenlit Clairity Breast, the first AI platform designed to predict five-year breast cancer risk using routine mammogram images, without relying on family history or demographic data. In trials, half of the younger women assessed showed risk levels typically seen in much older patients, raising big questions about current age-based screening guidelines.

🧸 Gen-AI Barbie is coming: Mattel is teaming up with OpenAI to bring generative AI to its products. Whether the debut product is a physical toy, digital experience, or something else, one thing is certain: it won’t be aimed at kids under 13, a move that helps Mattel avoid stricter regulations and concerns over marketing AI to young children.

💪 How much does it cost to build an AI fitness app? If you want to build an AI app like Fitbod, here’s a good breakdown of what to consider. Depending on scope (personalized coaching, nutrition tracking, wearable sync, legal fees, etc.), costs can range from $60K to over $300K. But with the AI fitness app market projected to hit $46.1B by 2034, it could be a massive opportunity if you play it smart.

🔥 Digital defense: Fire departments across the U.S. are turning to AI-powered cameras to respond to wildfires more quickly and with greater precision. Now active in 10 states, the Pano AI system uses 360-degree cameras and smoke detection algorithms to identify fires while providing real-time alerts and accurate location data.

📞 Friendly AI caller for seniors: The WSJ wrote about a nursing home that’s piloting an AI solution, Meela, to combat loneliness among seniors through personalized, weekly phone calls. The startup behind Meela is scaling the solution to other nursing homes, charging $65 per member, per month.

💑 Romance in the era of AI: According to a new study from Match Group, 16% of people have interacted with AI as a romantic companion, and 40% think that having an AI partner counts as cheating if you’re already in a relationship.

💟 AI plus the human touch: A new peer-reviewed study from ieso found that pairing its AI-powered digital program with human support delivered outcomes on par with traditional therapy, while using up to 8x less clinician time. The company is now offering its clinical-grade, safety-scaffolded systems via secure APIs to other organizations, so if you're building in this space, feel free to reach out at ieso.ai.

Seen on social

🟠 A public mental health GPT has handled over two million conversations and racked up more than 25,000 reviews…even though it says quite plainly it’s fictional and not real therapy. (link)

🟠 A Reddit user claims ChatGPT helped cure a decade of back pain doctors couldn’t solve. (link)

🟠 People are accidentally sharing their conversations with Meta AI (and lots of personal details!) on the app’s timeline. (link)

🟠 Your chatbot doesn’t care if you live or die. (link)

📻️ Podcast: The Future of Humanity in an AI World. Behavioral scientist Dr. Michelle Handy and Working Voices CEO Nick Smallman explore what it means to stay human in an AI-driven world, and how connection, creativity, and service to others can counter the isolation and self-absorption of modern life. (link)

That’s it for now! Have a tip, question, or want to sponsor? Just hit reply. And if I owe you a response, thanks for your patience as I catch up from the last few weeks.

Until next time,

Dana

P.S. Feel free to connect with me on LinkedIn!

If you were forwarded this email, you can subscribe here.
